Bespoke 360 Degree Questionnaire Design

At no cost... Lumus360 will work with you to put together a bespoke 360 feedback questionnaire that is ‘fit for purpose’.

Whilst generic, off-the-shelf questionnaires are OK, they never fully hit the mark!

Developing the questionnaire so that it provides insightful feedback against the organisation's expectations, context and leadership level is a fundamental part of getting 360 degree feedback right.

Some benefits of using a bespoke questionnaire include:

Great 360 feedback questionnaires are at the heart of every successful 360 tool, and our free 360 degree feedback questionnaire design service supports clients to get this right.

Our 360 questionnaire design service includes developing your questionnaire from any of the following:

Our approach to questionnaire design and the principles behind it align with best practice and are based on 20 years of knowledge and practical experience. This includes things like:

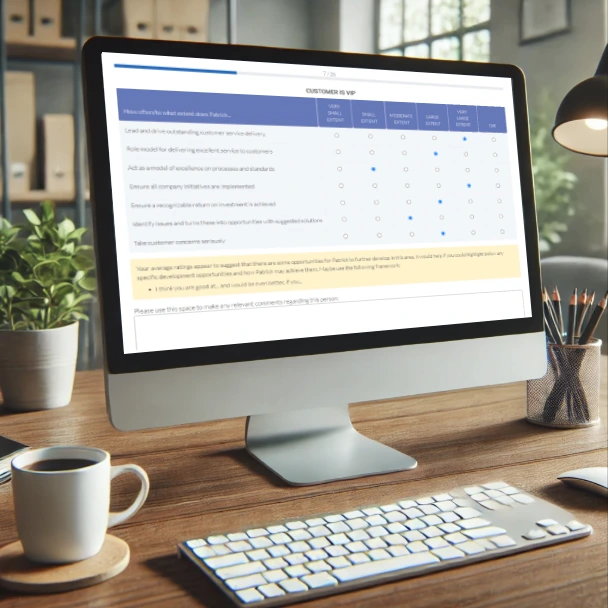

Leading the way in technology, we ensure our 360 questionnaires are presented in a way that enhances user experience whilst providing the following functionality:

The House of Commons approached Lumus360 with a clear objective: to develop a unique upward feedback tool built around the 10 behaviours that mattered most to the organisation. Instead of a standard solution, they needed a bespoke 360 feedback questionnaire design that aligned the entire organisation around what was genuinely important.

A traditional 360 feedback questionnaire template was not the right fit. The House of Commons required a bespoke feedback questionnaire that:

Lumus360 worked closely with key stakeholders from the House of Commons to create a completely custom 360 feedback questionnaire, built from the ground up using Lumus360’s 360 feedback questionnaire design service. The process included:

By using this 360 feedback questionnaire design and development service, Lumus360 ensured that the finished questionnaire met the exact operational requirements of the House of Commons. The questionnaire was delivered through the Lumus360 platform, featuring automatic saving, mobile compatibility and a user-friendly final preview.

The House of Commons successfully launched its tailored upward feedback initiative using this custom 360 feedback questionnaire, with a tool that:

With the support of Lumus360’s bespoke 360 feedback questionnaire design service, the organisation now has a ‘fit for purpose’ 180 feedback questionnaire that supports ongoing leadership improvement.